AKS with External DNS

Using external-dns add-on with Azure DNS and AKS

Update (2021-09-11): added/enhanced verification sections

This article covers using ExternalDNS to automate updating DNS records when applications are deployed on Kubernetes. This is needed if you wish to use a public endpoint and would prefer a friendlier DNS name rather than a public IP address.

This article will configure the following components:

- Azure cloud resources: Azure DNS Zone and AKS

- Kubernetes add-ons: ExternalDNS

- Demos: hello-kubernetes and Dgraph (Dgraph helm chart)

Articles in the Series

These articles are part of a series, and below is a list of articles in the series.

- AKS with external-dns: service with LoadBalancer type

- AKS with ingress-nginx: ingress (HTTP)

- AKS with cert-manager: ingress (HTTPS)

- AKS with GRPC and ingress-nginx: ingress (GRPC and HTTPS)

Requirements

Azure Subscription

You will need an Azure subscription, and you must be signed in with the Azure CLI.

Domain name

For this guide, you can use a public domain name and point that domain or a subdomain to the Azure DNS zone. This guide uses the fictional name example.com as an example.

Alternatively, you can use a private domain name, such as example.internal. This requires a local DNS server, edits to the local /etc/hosts file, an SSH jump host or VPN to access the Azure DNS service, or a combination of these.

Required Tools

These tools are required.

- Azure CLI tool (az): command line tool that interacts with Azure API

- Kubernetes client tool (kubectl): command line tool that interacts with Kubernetes API

- Helm (helm): command line tool for “templating and sharing Kubernetes manifests” (ref) that are bundled as Helm chart packages.

- helm-diff plugin: allows you to see the changes made with helm or helmfile before applying the changes.

- Helmfile (helmfile): command line tool that uses a “declarative specification for deploying Helm charts across many environments” (ref).

Optional Tools

I highly recommend these tools:

- POSIX shell (sh), e.g. GNU Bash (bash) or Zsh (zsh): the scripts in this guide were tested using either of these shells on macOS and Ubuntu Linux.

- curl (curl): tool to interact with web services from the command line.

- jq (jq): a JSON processor tool that can transform and extract objects from JSON, as well as provide colorized JSON output for greater readability.

Project Setup

As this project has a few moving parts (Azure DNS, AKS, and ExternalDNS with the example applications Dgraph and hello-kubernetes), these next few steps will help keep things consistent.

Project File Structure

The following structure will be used:

~/azure_externaldns/
├── env.sh
├── examples
│   ├── dgraph
│   │   └── helmfile.yaml
│   └── hello
│       └── helmfile.yaml
└── helmfile.yaml

With either Bash or Zsh, you can create the file structure with the following commands:
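As a minimal sketch, assuming Bash or Zsh and the ~/azure_externaldns location from the tree above, the layout can be created with mkdir and touch. PROJECT_DIR is a hypothetical override variable, not something from the original guide.

```shell
# Project root; PROJECT_DIR is a hypothetical override, defaulting to ~/azure_externaldns
PROJECT_DIR="${PROJECT_DIR:-$HOME/azure_externaldns}"

# Create the examples/dgraph and examples/hello directories (and any parents)
mkdir -p "$PROJECT_DIR/examples/dgraph" "$PROJECT_DIR/examples/hello"

# Create empty files that later steps fill in
touch "$PROJECT_DIR/env.sh" \
  "$PROJECT_DIR/helmfile.yaml" \
  "$PROJECT_DIR/examples/dgraph/helmfile.yaml" \
  "$PROJECT_DIR/examples/hello/helmfile.yaml"

# Work from the project root for the rest of the guide
cd "$PROJECT_DIR"
```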

Project Environment Variables

Set up the environment variables below to keep things consistent across a variety of tools: helm, helmfile, kubectl, jq, and az.

If you are using a POSIX shell, you can save these into a script and source that script whenever needed. Copy this source script and save as env.sh:
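A minimal env.sh could look like the following. The variable names come from this guide; the values for AZ_RESOURCE_GROUP and AZ_CLUSTER_NAME are hypothetical placeholders, westus2 is assumed for AZ_LOCATION, and exporting KUBECONFIG to a per-cluster file is an assumption rather than a requirement.

```shell
# env.sh: shared settings for az, kubectl, helm, and helmfile.
# Placeholder values (assumptions): change AZ_DNS_DOMAIN to a domain you own.
export AZ_RESOURCE_GROUP="externaldns-demo"
export AZ_LOCATION="westus2"
export AZ_CLUSTER_NAME="externaldns-demo"
export AZ_DNS_DOMAIN="example.com"

# Account identifiers read from the signed-in Azure CLI session.
# These stay empty if az is not installed or `az login` has not been run.
export AZ_SUBSCRIPTION_ID="$(az account show --query id --output tsv 2>/dev/null)"
export AZ_TENANT_ID="$(az account show --query tenantId --output tsv 2>/dev/null)"

# Assumption: keep this cluster's credentials in their own kubeconfig file
export KUBECONFIG="$HOME/.kube/$AZ_CLUSTER_NAME"
```

Keeping the cluster credentials in a separate KUBECONFIG file is a design choice that avoids accidentally running kubectl or helmfile against another cluster from your default ~/.kube/config.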

You will need to change AZ_DNS_DOMAIN to a domain that you have registered, as example.com is reserved for documentation and cannot be registered. Make sure you delegate control of the domain to Azure DNS by updating its name server records at your registrar.

Additionally, you can use the defaults or opt to change values for AZ_RESOURCE_GROUP, AZ_LOCATION, and AZ_CLUSTER_NAME.

This env.sh file will be used for the rest of the project. When finished with the necessary edits, source it:

source env.sh

Provisioning Azure Resources

The Azure cloud resources can be created with the following steps:

- Azure DNS zone

- AKS (Azure Kubernetes Service) with managed identity, which is now enabled by default.

NOTE: Earlier guides on the public Internet may document the older name of Managed Identity called MSI (Managed Service Identity). These are the same thing.

NOTE: Earlier guides on the public Internet may document a process of creating a service principal with a client secret (password). While this can still be used, the process is more complex and no longer needed with managed identities.
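The provisioning steps could be sketched with the Azure CLI as below, wrapped in shell functions so they can be sourced and run one at a time. This assumes env.sh has been sourced and you are signed in with az login; the function names and the three-node cluster size are illustrative choices, not values from the original guide.

```shell
# Hypothetical helper: resource group plus a public Azure DNS zone
create_dns_zone() {
  az group create \
    --name "$AZ_RESOURCE_GROUP" \
    --location "$AZ_LOCATION"

  az network dns zone create \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_DNS_DOMAIN"

  # Print the zone's name servers; your registrar's NS records must point here
  az network dns zone show \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_DNS_DOMAIN" \
    --query nameServers --output tsv
}

# Hypothetical helper: AKS cluster (managed identity is the default) plus credentials
create_aks_cluster() {
  # Assumption: 3 nodes is enough for the demos in this guide
  az aks create \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_CLUSTER_NAME" \
    --node-count 3 \
    --generate-ssh-keys

  # Write credentials to the kubeconfig used by kubectl, helm, and helmfile
  az aks get-credentials \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_CLUSTER_NAME" \
    --file "${KUBECONFIG:-$HOME/.kube/config}"
}
```

For example, run source env.sh && create_dns_zone && create_aks_cluster. No service principal or client secret is created here, since the cluster's managed identities are generated by AKS.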

Verifying Azure DNS Zone

Gather information on a particular domain using the built-in --query flag with JMESPath syntax:

az network dns zone list \
  --query "[?name=='$AZ_DNS_DOMAIN']" --output table

This should show something like the following (with your own domain name, of course):

Verifying Azure Kubernetes Service

When completed, you should be able to see resources already allocated in Kubernetes with this command:

kubectl get all --all-namespaces

The results should be similar to this:

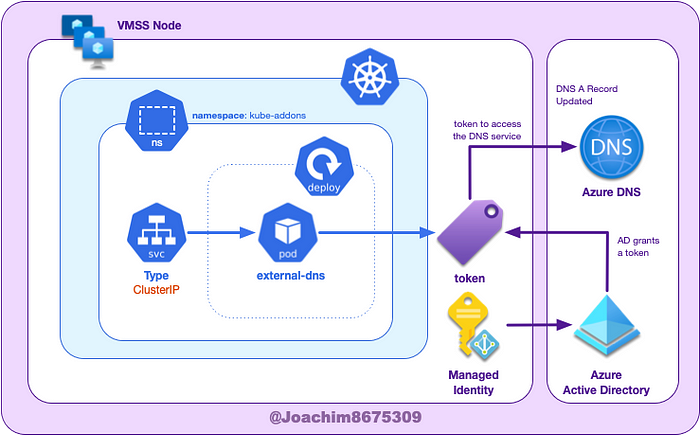

Authorizing access to Azure DNS

The AKS cluster must be configured to allow the external-dns pod to access the Azure DNS zone. This can be done using the kubelet identity, which is the user-assigned managed identity that is assigned to the node pools (managed by VMSS) before their creation.

📔 NOTE: A managed identity (previously called MSI or managed service identity) is a wrapper around service principals to make management simpler. Essentially, they are mapped to an Azure resource, so that when the Azure resource no longer exists, the associated service principal will be removed automatically.

⚠️ WARNING: While using the kubelet identity may be fine for limited test environments, it SHOULD NEVER BE USED IN PRODUCTION. It violates the principle of least privilege, because every pod running on the node pools can use that identity to modify the DNS zone. Alternatives for configuring access are AAD Pod Identity or the more recent Workload Identity.

First, get the scope, which has a format like this:

/subscriptions/<subscription id>/resourceGroups/<resource group name>/providers/Microsoft.Network/dnszones/<zone name>

Attach role to AKS (kubelet identity object id)

Fetch AZ_DNS_SCOPE and AZ_PRINCIPAL_ID, and then grant access to this specific Azure DNS zone:
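A sketch of fetching the scope and the kubelet identity, then granting access, could look like this. It assumes the built-in DNS Zone Contributor role is sufficient and that env.sh has been sourced; grant_dns_access is a hypothetical helper name.

```shell
grant_dns_access() {
  # Full resource ID of the DNS zone, used as the role assignment scope
  AZ_DNS_SCOPE="$(az network dns zone show \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_DNS_DOMAIN" \
    --query id --output tsv)"
  export AZ_DNS_SCOPE

  # Object ID of the kubelet managed identity attached to the node pools
  AZ_PRINCIPAL_ID="$(az aks show \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_CLUSTER_NAME" \
    --query identityProfile.kubeletidentity.objectId --output tsv)"
  export AZ_PRINCIPAL_ID

  # Assumption: DNS Zone Contributor, scoped to this one zone only
  az role assignment create \
    --assignee "$AZ_PRINCIPAL_ID" \
    --role "DNS Zone Contributor" \
    --scope "$AZ_DNS_SCOPE"
}
```

Scoping the assignment to the zone rather than the whole resource group keeps the blast radius smaller, although the warning about the kubelet identity still applies.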

Verify Role Assignment

You can verify the results with the following commands:
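Listing the role assignments for the kubelet identity on the zone could look like the following, assuming AZ_PRINCIPAL_ID and AZ_DNS_SCOPE are already set in your shell; verify_dns_access is a hypothetical helper name.

```shell
verify_dns_access() {
  # Show only the role name and scope of each matching assignment
  az role assignment list \
    --assignee "$AZ_PRINCIPAL_ID" \
    --scope "$AZ_DNS_SCOPE" \
    --query "[].{role:roleDefinitionName, scope:scope}" \
    --output table
}
```

If access was granted with the DNS Zone Contributor role, that role name should appear in the output next to the zone's resource ID.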

This should show something like the following:

Installing External DNS

Now comes the fun part, installing the automation so that services with endpoints can automatically register records in the Azure DNS zone when deployed.

Using Helmfile

Copy the following and save it as helmfile.yaml:

Make sure that the appropriate environment variables are set before running this command: AZ_RESOURCE_GROUP, AZ_DNS_DOMAIN, AZ_TENANT_ID, and AZ_SUBSCRIPTION_ID. Otherwise, the command will fail.
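A minimal helmfile.yaml for ExternalDNS might look like the following, written with a shell heredoc. It assumes the Bitnami external-dns chart and its provider, azure.*, and domainFilters values, which can change between chart versions, so check the chart's values.yaml and consider pinning a version. The requiredEnv calls are what make helmfile fail when one of the variables is missing.

```shell
# The quoted 'EOF' stops the shell from expanding anything; helmfile renders
# the {{ requiredEnv ... }} templates itself when you run `helmfile apply`.
cat > helmfile.yaml <<'EOF'
repositories:
  - name: bitnami
    url: https://charts.bitnami.com/bitnami

releases:
  - name: external-dns
    namespace: kube-addons
    chart: bitnami/external-dns
    values:
      - provider: azure
        azure:
          resourceGroup: {{ requiredEnv "AZ_RESOURCE_GROUP" }}
          tenantId: {{ requiredEnv "AZ_TENANT_ID" }}
          subscriptionId: {{ requiredEnv "AZ_SUBSCRIPTION_ID" }}
          # authenticate with the kubelet managed identity (no client secret)
          useManagedIdentityExtension: true
        # only manage records inside this zone
        domainFilters:
          - {{ requiredEnv "AZ_DNS_DOMAIN" }}
EOF
```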

Once ready, simply run:

helmfile apply

Verify external-dns configuration

As a sanity check in case things go wrong, you can verify the configuration in the azure.json file.

kubectl get secret external-dns \
  --namespace kube-addons \
  --output jsonpath="{.data.azure\.json}" | base64 --decode

This should show something like:

The tenantId and subscriptionId values are obviously obfuscated. They should match the values set in the environment variables AZ_TENANT_ID and AZ_SUBSCRIPTION_ID after running source env.sh.

Testing ExternalDNS is Running

You can test that the external-dns pod is running with:
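A quick check of the pod and its recent logs could look like this, assuming the release name and namespace used in this guide (external-dns in kube-addons) and the standard app.kubernetes.io/name label that Bitnami charts apply; check_external_dns is a hypothetical helper name.

```shell
check_external_dns() {
  # Pod status for the ExternalDNS release
  kubectl get pods \
    --namespace kube-addons \
    --selector app.kubernetes.io/name=external-dns

  # Last 50 log lines; scan these for authorization errors
  kubectl logs deployment/external-dns \
    --namespace kube-addons \
    --tail 50
}
```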

If there are authorization errors in the logs, you know immediately that the setup is not working. You’ll need to revisit the role assignment and confirm that it grants access to the DNS zone and is attached to the correct managed identity (service principal) used by the VMSS node pool workers.

Example using hello-kubernetes

hello-kubernetes is a simple application that prints out the pod name. This application can demonstrate that the automation with ExternalDNS and Azure DNS works correctly.

A service of LoadBalancer type will be configured with the required annotation to tell ExternalDNS the desired DNS A record to configure. ExternalDNS will scan services for this annotation, and then trigger the automation.
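To illustrate the mechanism, here is a plain Kubernetes manifest (not the helmfile used below) with a LoadBalancer service carrying the external-dns.alpha.kubernetes.io/hostname annotation. The paulbouwer/hello-kubernetes image tag, port 8080, the replica count, and the file name hello-kubernetes.yaml are assumptions for illustration.

```shell
# Domain from env.sh, falling back to the placeholder used in this guide
DOMAIN="${AZ_DNS_DOMAIN:-example.com}"

# Unquoted EOF so the shell substitutes ${DOMAIN} into the manifest
cat > hello-kubernetes.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-kubernetes
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello-kubernetes
  template:
    metadata:
      labels:
        app: hello-kubernetes
    spec:
      containers:
        - name: hello-kubernetes
          image: paulbouwer/hello-kubernetes:1.10
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: hello-kubernetes
  annotations:
    # ExternalDNS watches for this annotation and creates the A record
    external-dns.alpha.kubernetes.io/hostname: hello.${DOMAIN}
spec:
  type: LoadBalancer
  selector:
    app: hello-kubernetes
  ports:
    - port: 80
      targetPort: 8080
EOF
```

This could be applied with kubectl create namespace hello followed by kubectl apply --namespace hello --filename hello-kubernetes.yaml. Once Azure assigns the service a public IP, ExternalDNS creates the hello record pointing at it.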

Deploy hello-kubernetes using helmfile

Copy and paste the following manifest template below as examples/hello/helmfile.yaml:

We can deploy this using the following command:

source env.sh
helmfile --file examples/hello/helmfile.yaml apply

Verify Hello Kubernetes is deployed

kubectl get all --namespace hello

You should see resources similar to these deployed:

Verify DNS Records were created

Run the following command to verify that the records were created by external-dns:
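Listing the zone's record sets with the Azure CLI could look like this; list_dns_records and list_a_records are hypothetical helper names. ExternalDNS, with its default TXT registry, also writes TXT records alongside the A records it manages so it can track ownership.

```shell
# All record sets in the zone (A, TXT, NS, SOA)
list_dns_records() {
  az network dns record-set list \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --zone-name "$AZ_DNS_DOMAIN" \
    --output table
}

# Only the A record sets, e.g. hello (and later alpha and ratel)
list_a_records() {
  az network dns record-set a list \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --zone-name "$AZ_DNS_DOMAIN" \
    --output table
}
```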

This should look something like the following:

To verify specific record entries, use a JMESPath query:

The results should look something like the following:

Verify Hello Kubernetes works

After a few moments, you can check the results at http://hello.example.com (substituting your domain for example.com).

Cleanup Hello Kubernetes

The following will delete hello-kubernetes and the public IP address:

helm delete hello-kubernetes --namespace hello

Example using Dgraph

Dgraph is a distributed graph database and has a helm chart that can be used to install Dgraph into a Kubernetes cluster. You can use either helmfile or helm methods to install Dgraph.

About the Illustration: The illustration shows the relationship between networking resources and the Kubernetes service configuration using an external load balancer. In AKS, a single load balancer is assigned to the cluster, and it maps multiple public IP addresses to the appropriate Kubernetes services.

In this scenario, 20.99.224.105 will be mapped to the Dgraph Alpha service for ports 8080 and 9080, while 20.99.224.114 will map port 80 to Dgraph Ratel service. Additionally, Azure DNS will have alpha and ratel address records that point to these two respective public IP addresses.

Securing Dgraph

Normally, you would only make the database available through a private endpoint, not on the public Internet. However, for demonstration purposes, public endpoints through a service of type LoadBalancer will be used.

As a precaution, we can add an allow list (also called a whitelist) containing your IP address as well as the AKS IP address ranges used for pods and services. You can do that in Bash or Zsh with the following commands:
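One way to assemble the allow list is a small helper that joins your public IP address and the cluster's CIDR ranges into a comma-separated string. build_allow_list is a hypothetical name, and turning a bare address into a /32 range is an assumption about the format you want to hand to Dgraph.

```shell
# Join addresses and CIDR ranges into "a/32,b/16,..."; empty arguments are skipped.
build_allow_list() {
  local list="" entry
  for entry in "$@"; do
    # Skip empty values, e.g. a podCidr that is not set on the cluster
    if [ -z "$entry" ]; then
      continue
    fi
    case "$entry" in
      */*) ;;                 # already a CIDR range, keep as-is
      *) entry="$entry/32" ;; # single host address becomes a /32
    esac
    # Add a comma separator only when the list is non-empty
    list="${list:+$list,}$entry"
  done
  printf '%s\n' "$list"
}
```

For example, you might pass your public address from curl --silent https://ifconfig.me plus networkProfile.podCidr and networkProfile.serviceCidr from az aks show --query. The podCidr field is only populated for kubenet clusters, which is why empty values are skipped.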

Deploy Dgraph with Helmfile

Copy the following and save it as examples/dgraph/helmfile.yaml:

When ready, run the following:

helmfile --file examples/dgraph/helmfile.yaml apply

Verify Dgraph is deployed

Check that the services are running:

kubectl --namespace dgraph get all

This should output something similar to the following:

Verify DNS record updates

Run the following command to verify that the records were created by external-dns:

This should show additional ratel and alpha records in the list:

To verify specific address record entries (again using the spiffy JMESPath query):

With the addition of ratel and alpha, there should be three public IP addresses now:

Verify Dgraph Alpha health check

Verify that Dgraph Alpha is accessible by the domain name (substituting your domain for example.com):

curl --silent http://alpha.example.com:8080/health | jq

This should output something similar to the following:

Test solution with Dgraph Ratel web user interface

After a few moments, you can check the results at http://ratel.example.com (substituting your domain for example.com).

In the Dgraph Server Connection dialog, configure the domain, e.g. http://alpha.example.com:8080 (substituting your domain for example.com).

From there, you can run through some tutorials like https://dgraph.io/docs/get-started/ to create a small Star Wars graph database and run some queries.

Cleanup Dgraph resources

You can remove Dgraph resources, the public IP address, and the external disks (persistent volume claims) with the following:

helm delete demo --namespace dgraph
kubectl delete pvc --namespace dgraph --selector release=demo

Cleanup the Project

You can clean up resources that incur costs with the following:

Remove EVERYTHING with one command

This command is dangerous. Verify you are deleting only the designated resource group.

az group delete --resource-group $AZ_RESOURCE_GROUP

Remove just the AKS Cluster

This will remove only the AKS cluster and its associated resources:

az aks delete \
  --resource-group $AZ_RESOURCE_GROUP \
  --name $AZ_CLUSTER_NAME

Remove just the Azure DNS Zone

az network dns zone delete \
  --resource-group $AZ_RESOURCE_GROUP \
  --name $AZ_DNS_DOMAIN

Resources and Further Reading

Here are some resources that I have come across that may be useful.

Blog Source Code

Related Articles

- DevOps Tools: Introducing Helmfile: a brief overview of helm and helmfile.

- Azure DNS Automation: how to set up an Azure DNS Zone for a public domain or subdomain.

- Azure Kubernetes Service: how to provision a basic Kubernetes cluster using AKS (Azure Kubernetes Service) with the Azure CLI tool (az).

- Extending GKE with External DNS: how to set up external-dns for Cloud DNS on GKE (Google Kubernetes Engine).

External DNS

Tools

Azure Identity

- Application and service principal objects in Azure Active Directory

- Create an Azure service principal with the Azure CLI

Azure DNS

Azure Kubernetes Service

Helm Charts

- Dgraph helm chart (official)

- ExternalDNS (Bitnami) helm chart

Document History

- 2021-09-11: added more verification instructions; changed westus to westus2, as westus doesn’t support HA; corrected the illustration so that only 1 LB is used for all external LB allocations under AKS; added illustrations of the K8S components and Azure resources used.

- 2021-09-05: converted multi-line code blocks to gists, as it is difficult to copy text from Medium.

- 2021-06-28: removed envsubst & terraform for simplicity

Conclusion

On the surface, this seems easy:

automate DNS updates when deploying applications on the AKS flavor of Kubernetes with ExternalDNS.

As you can see, it is a little more complex, as configuring cloud resources, both Kubernetes and Azure, can zigzag through a number of tools.

Takeaways

The important takeaways from this article include:

- ExternalDNS add-on with an Azure DNS zone

- Using kubelet identity (managed identity) to grant access to Azure DNS.

- Using an external load balancer with a service of type LoadBalancer.

Some extra takeaways are exposure to:

- Kubernetes tools: kubectl, helm, and helmfile.

- Crafting JSON queries with JMESPath or jq.

- The distributed graph database Dgraph.

Where to go from here?

Here are some more advanced topics around either external-dns or provisioning AKS:

- ExternalDNS with Ingress resources, such as ingress-nginx or AGIC

- Certificates, such as cert-manager.

- Private AKS clusters

- Private Azure DNS Zones

In any event, I hope this is useful and best of success in your AKS journey.