AKS with ingress-nginx

Using ingress-nginx add-on with Azure LB and AKS

This article covers adding an ingress controller called ingress-nginx to AKS (Azure Kubernetes Service). An ingress is a reverse proxy that allows routing web traffic to appropriate targeted services using a single load balancer.

Using example applications (Dgraph and hello-kubernetes), this article covers configuring an ingress to route traffic by an FQDN host name, which is configured automatically in Azure DNS using external-dns.

This article will configure the following components:

- Azure Cloud resources: AKS and Azure DNS Zone

- Kubernetes resources: ingress-nginx and ExternalDNS

- Example applications: hello-kubernetes and Dgraph.

Articles in the Series

These articles are part of a series, and below is a list of articles in the series.

- AKS with external-dns: service with LoadBalancer type

- AKS with ingress-nginx: ingress (HTTP)

- AKS with cert-manager: ingress (HTTPS)

- AKS with GRPC and ingress-nginx: ingress (GRPC and HTTPS)

Previous Articles

In the previous article, I wrote about how to use external-dns with Azure DNS to configure DNS address records automatically when deploying a Kubernetes application. That was configured through a service annotation.

In this article, the Helmfile tool is used to template manifests and Helm chart values.

Requirements

These are some logistical and tool requirements for this article:

Registered domain name

This tutorial uses a public domain name, so you will need to purchase one from a registrar to follow everything in this tutorial. This generally costs about $2 to $20 per year.

A fictional domain of example.com will be used as an example. Thus, depending on the examples used, there would be hello.example.com, ratel.example.com, and alpha.example.com.

Alternatively, you can do either of these:

- use a private domain, such as example.dev, so there would be hello.example.dev, ratel.example.dev, and alpha.example.dev, depending on the examples used.

- skip using domains altogether and use HTTP paths instead, such as /hello, /ratel, and /alpha, depending on the examples used.

Required Tools

These tools are required.

- Azure CLI tool (az): command line tool that interacts with the Azure API

- Kubernetes client tool (kubectl): command line tool that interacts with the Kubernetes API

- Helm (helm): command line tool for “templating and sharing Kubernetes manifests” that are bundled as Helm chart packages.

- helm-diff plugin: allows you to see the changes made with helm or helmfile before applying the changes.

- Helmfile (helmfile): command line tool that uses a “declarative specification for deploying Helm charts across many environments”.

Optional Tools

I highly recommend these tools:

- POSIX shell (sh), e.g. GNU Bash (bash) or Zsh (zsh): the scripts in this guide were tested using either of these shells on macOS and Ubuntu Linux.

- curl (curl): tool to interact with web services from the command line.

- jq (jq): a JSON processor tool that can transform and extract objects from JSON, as well as provide colorized JSON output for greater readability.

Project Setup

As this project has a few moving parts (Azure DNS, AKS, ingress-nginx, ExternalDNS) with example applications Dgraph and hello-kubernetes, these next few steps will help keep things consistent.

Project File Structure

The following structure will be used:

~/azure_ingress_nginx/
├── env.sh
├── examples
│   ├── dgraph
│   │   └── helmfile.yaml
│   └── hello
│       └── helmfile.yaml
└── helmfile.yaml

With either Bash or Zsh, you can create the file structure with the following commands:
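Here is a minimal sketch of commands that would produce the layout shown above. The files are created empty and get filled in during the following sections.

```shell
# Create the project directories (explicit paths, so this also works in plain sh)
mkdir -p ~/azure_ingress_nginx/examples/dgraph
mkdir -p ~/azure_ingress_nginx/examples/hello

# Create empty placeholder files that later sections fill in
cd ~/azure_ingress_nginx
touch env.sh helmfile.yaml \
  examples/dgraph/helmfile.yaml \
  examples/hello/helmfile.yaml
```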

These instructions from this point will assume that you are in the ~/azure_ingress_nginx directory, so when in doubt:

cd ~/azure_ingress_nginx

Project Environment Variables

Set up these environment variables to keep things consistent among a variety of tools: helm, helmfile, kubectl, jq, az.

If you are using a POSIX shell, you can save these into a script and source that script whenever needed. Copy this source script and save as env.sh:
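The contents of env.sh might look like the sketch below. The variable names are the ones referenced throughout this article, while the values (and the optional KUBECONFIG line) are placeholders that I am assuming for illustration, not required settings.

```shell
# env.sh - shared settings for az, kubectl, helm, and helmfile
# NOTE: all values below are illustrative assumptions; change them as needed.
export AZ_RESOURCE_GROUP="aks-ingress-nginx"   # hypothetical resource group name
export AZ_LOCATION="westus2"                   # any Azure region you have access to
export AZ_CLUSTER_NAME="ingress-nginx-demo"    # hypothetical cluster name
export AZ_DNS_DOMAIN="example.com"             # replace with a domain you own

# Optional assumption: keep this cluster's credentials in their own kubeconfig file
export KUBECONFIG="$HOME/.kube/$AZ_CLUSTER_NAME.yaml"
```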

You will be required to change AZ_DNS_DOMAIN to a domain that you have registered, as example.com is already owned. Make sure you transfer domain control to Azure DNS.

Additionally, you can use the defaults or opt to change values for AZ_RESOURCE_GROUP, AZ_LOCATION, and AZ_CLUSTER_NAME.

This env.sh file will be used for the rest of the project. When finished with the necessary edits, source it:

source env.sh

Resource Group

In Azure, resources are organized under resource groups.

az group create -n $AZ_RESOURCE_GROUP -l $AZ_LOCATION

Azure Cloud Resources

For simplicity, you can create the resources needed for this project with the following:
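As a rough sketch, the provisioning could look something like the function below. It assumes env.sh has been sourced and the resource group already exists. The node count and cluster options are my own illustrative choices, and the commands are wrapped in a function so that nothing runs until you call it.

```shell
# Sketch: provision the DNS zone and AKS cluster used in this article.
create_azure_resources() {
  # Public DNS zone that ExternalDNS will manage records in
  az network dns zone create \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_DNS_DOMAIN"

  # AKS cluster with a managed identity on a VMSS node pool
  # (node count and options are illustrative assumptions)
  az aks create \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_CLUSTER_NAME" \
    --node-count 3 \
    --vm-set-type VirtualMachineScaleSets \
    --enable-managed-identity \
    --generate-ssh-keys

  # Fetch credentials so kubectl, helm, and helmfile can reach the cluster
  az aks get-credentials \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_CLUSTER_NAME" \
    --file "${KUBECONFIG:-$HOME/.kube/config}"
}
```

After sourcing env.sh, run create_azure_resources to create everything in one pass.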

You will need to transfer domain management to Azure DNS for a root domain like example.com, or, if you are using a sub-domain like dev.example.com, update the NS (name server) records in the parent zone to point to the Azure DNS name servers. This process, along with how to provision the equivalent with Terraform, is fully detailed in the Azure Linux VM with DNS article.

For a more robust script on provisioning Azure Kubernetes Service, see the Azure Kubernetes Service: Provision an AKS Kubernetes Cluster with Azure CLI article.

Authorizing access to Azure DNS

We need to grant the Managed Identity installed on the VMSS node pool workers access to the Azure DNS zone. This will allow any pod running on a Kubernetes worker node to access the Azure DNS zone.

NOTE: A Managed Identity is a wrapper around service principals to make management simpler. Essentially, it is mapped to an Azure resource, so that when the Azure resource no longer exists, the associated service principal is removed.

First, we want to get the scope, which has a format like this:

/subscriptions/<subscription id>/resourceGroups/<resource group name>/providers/Microsoft.Network/dnszones/<zone name>/

Extract the scope and the service principal object ID, and then grant access, using these commands:
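One way to do this is sketched below, assuming env.sh has been sourced and both the DNS zone and the cluster exist. The function name and the AZ_DNS_SCOPE and AZ_PRINCIPAL_ID variable names are my own; the role used is the built-in "DNS Zone Contributor" role.

```shell
# Sketch: let the node pool's managed identity (kubelet identity) manage the DNS zone.
grant_dns_access() {
  # Scope: the full resource ID of the Azure DNS zone
  AZ_DNS_SCOPE=$(az network dns zone show \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_DNS_DOMAIN" \
    --query "id" --output tsv)

  # Object ID of the managed identity attached to the VMSS worker nodes
  AZ_PRINCIPAL_ID=$(az aks show \
    --resource-group "$AZ_RESOURCE_GROUP" \
    --name "$AZ_CLUSTER_NAME" \
    --query "identityProfile.kubeletidentity.objectId" --output tsv)

  # Grant that identity the built-in "DNS Zone Contributor" role on the zone
  az role assignment create \
    --assignee "$AZ_PRINCIPAL_ID" \
    --role "DNS Zone Contributor" \
    --scope "$AZ_DNS_SCOPE"
}
```

Call grant_dns_access once after the cluster is created. The role assignment only needs to exist before ExternalDNS starts updating records.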

Installing Addons

Now that the infrastructure is provisioned, we can install the required Kubernetes add-ons ingress-nginx and external-dns, and configure these to use the Azure DNS Zone for DNS record updates.

Copy the following and save as helmfile.yaml:
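A helmfile.yaml along these lines could be written from the shell as sketched here. Several things are assumptions on my part rather than settled facts: the chart repositories, the kube-addons namespace, the chart value names (taken from the ingress-nginx and Bitnami external-dns charts as I know them), and the AZ_TENANT_ID and AZ_SUBSCRIPTION_ID environment variables. Check the value names against the chart versions you install.

```shell
# Sketch: write a helmfile.yaml that installs ingress-nginx and external-dns.
# The quoted 'EOF' keeps the shell from touching the {{ ... }} Helmfile templates.
cat > helmfile.yaml <<'EOF'
repositories:
  - name: ingress-nginx
    url: https://kubernetes.github.io/ingress-nginx
  - name: bitnami
    url: https://charts.bitnami.com/bitnami

releases:
  # Ingress controller; its Service of type LoadBalancer gets the Azure LB
  - name: ingress-nginx
    namespace: kube-addons
    chart: ingress-nginx/ingress-nginx
    values:
      - controller:
          replicaCount: 2

  # ExternalDNS watches ingress and service resources and updates Azure DNS
  - name: external-dns
    namespace: kube-addons
    chart: bitnami/external-dns
    values:
      - provider: azure
        azure:
          resourceGroup: {{ requiredEnv "AZ_RESOURCE_GROUP" }}
          tenantId: {{ requiredEnv "AZ_TENANT_ID" }}
          subscriptionId: {{ requiredEnv "AZ_SUBSCRIPTION_ID" }}
          useManagedIdentityExtension: true
        sources:
          - ingress
          - service
        domainFilters:
          - {{ requiredEnv "AZ_DNS_DOMAIN" }}
        txtOwnerId: external-dns
EOF
```

With this sketch, AZ_TENANT_ID and AZ_SUBSCRIPTION_ID would need to be exported before running helmfile, for example from az account show --query tenantId --output tsv and az account show --query id --output tsv. Depending on your Helmfile version, a file containing templates may need to be named helmfile.yaml.gotmpl instead.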

Once ready, simply run:

source env.sh && helmfile apply

Example using hello-kubernetes

hello-kubernetes is a simple application that prints out the pod names. The script below includes an embedded ingress manifest that includes a host name configuration value. ExternalDNS scans this value and then triggers automation to configure an address record on the Azure DNS zone.
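To make the host name part concrete, here is a sketch that renders a standalone ingress manifest of the kind described. It assumes a Service named hello-kubernetes listening on port 80 in the hello namespace, an ingress class named nginx, and a cluster that supports the networking.k8s.io/v1 API. The file name hello-ingress.yaml is hypothetical.

```shell
# Sketch: render an Ingress manifest for the hello-kubernetes example.
# Falls back to example.com when env.sh has not been sourced.
AZ_DNS_DOMAIN="${AZ_DNS_DOMAIN:-example.com}"

cat > hello-ingress.yaml <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-kubernetes
  namespace: hello
spec:
  ingressClassName: nginx
  rules:
    # ExternalDNS picks up this host name and creates the address record
    - host: hello.${AZ_DNS_DOMAIN}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: hello-kubernetes
                port:
                  number: 80
EOF
```

You could inspect the result without changing the cluster by running kubectl apply --dry-run=client -f hello-ingress.yaml.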

Copy the file below and save as examples/hello/helmfile.yaml:

Once ready, simply run:

source env.sh
pushd ./examples/hello/
helmfile apply
popd

Verify Hello Kubernetes is deployed

kubectl get all,ing --namespace hello

This should produce something similar to this:

Verify Hello Kubernetes works

After a few moments, you can check the results at http://hello.example.com (substituting your domain for example.com).
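Since the DNS record and load balancer can take a little while to become reachable, a small polling helper can save some manual refreshing. This is only a sketch: wait_for_url is a hypothetical helper name, and the attempt count and delay defaults are arbitrary.

```shell
# Sketch: poll a URL until it answers successfully or the attempts run out.
# Usage: wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_url() {
  url=$1
  attempts=${2:-30}
  delay=${3:-10}
  i=1
  while [ "$i" -le "$attempts" ]; do
    # --fail makes curl return non-zero on HTTP errors such as 404 or 503
    if curl --silent --fail --output /dev/null "$url"; then
      echo "ready: $url"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "timed out: $url" >&2
  return 1
}
```

For example, wait_for_url "http://hello.$AZ_DNS_DOMAIN" would keep trying for up to five minutes with the defaults above.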

Example using Dgraph

Dgraph is a distributed graph database and has a helm chart that can be used to install Dgraph into a Kubernetes cluster. You can use either helmfile or helm methods to install Dgraph.

Dgraph Endpoint Security

There are two applications that are connected to the public Internet through the ingress: Dgraph Ratel and Dgraph Alpha. Dgraph Ratel is just a client (React) application, so exposing it carries a low risk. The Dgraph Alpha service is another story, as this is the backend database and should never be exposed to the public Internet without some sort of restriction, such as a network security group that limits inbound traffic to your home office.

For demonstration purposes, securing the endpoints is not covered, but before rolling this into production, you will want to consider these options:

- Deploy an AKS cluster with a private subnet, so that internal load balancers can be used.

- Deploy an ingress that uses an internal load balancer on private subnets, and use this ingress for the Dgraph endpoint.

- Use an SSH jump host or a VPN to access the private subnets for administrative or development purposes.
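As a small illustration of the internal load balancer option, the sketch below writes Helm values for an ingress-nginx controller whose service asks Azure for an internal load balancer. The file name internal-ingress-values.yaml is hypothetical, and a second controller would also need its own ingress class, which is not shown here.

```shell
# Sketch: Helm values for an ingress-nginx controller behind an internal Azure LB.
cat > internal-ingress-values.yaml <<'EOF'
controller:
  service:
    annotations:
      # Ask Azure for an internal (private) load balancer instead of a public one
      service.beta.kubernetes.io/azure-load-balancer-internal: "true"
EOF
```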

Dgraph Alpha Security

We can apply some security on the Dgraph Alpha service itself by adding an allow list (also called a whitelist):

# get AKS pod and service IP addresses
DG_ALLOW_LIST=$(az aks show \
  --name $AZ_CLUSTER_NAME \
  --resource-group $AZ_RESOURCE_GROUP | \
  jq -r '.networkProfile.podCidr,.networkProfile.serviceCidr' | \
  tr '\n' ','
)

# append home office IP address
MY_IP_ADDRESS=$(curl --silent ifconfig.me)
DG_ALLOW_LIST="${DG_ALLOW_LIST}${MY_IP_ADDRESS}/32"
export DG_ALLOW_LIST

Deploy Dgraph

Copy the file below and save as examples/dgraph/helmfile.yaml:

When ready run the following:

source env.sh
pushd examples/dgraph/
helmfile apply
popd

Verify Dgraph is deployed

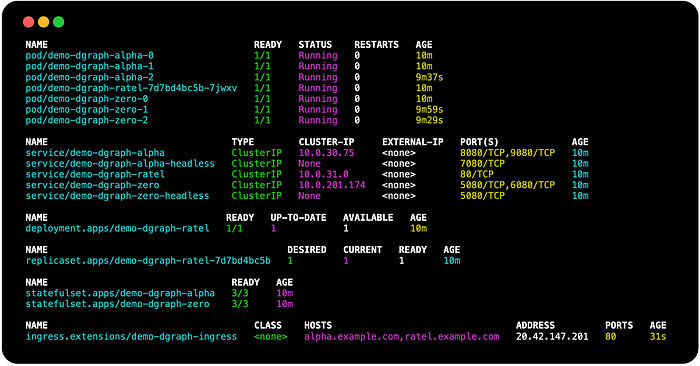

Check that the services are running:

kubectl get all,ing --namespace dgraph

The results should look something similar to this:

Verify Dgraph Alpha health check

Verify that Dgraph Alpha is accessible by the domain name (substituting your domain for example.com):

curl --silent http://alpha.example.com/health | jq

This should look similar to the following:

Upload Data and Schema

There are some data and schema files, adapted from the tutorial at https://dgraph.io/docs/get-started/, that you can download:

PREFIX=gist.githubusercontent.com/darkn3rd
RDF_GIST_ID=398606fbe7c8c2a8ad4c0b12926e7774
RDF_FILE=e90e1e672c206c16e36ccfdaeb4bd55a84c15318/sw.rdf
SCHEMA_GIST_ID=b712bbc52f65c68a5303c74fd08a3214
SCHEMA_FILE=b4933d2b286aed6e9c32decae36f31c9205c45ba/sw.schema

curl -sO https://$PREFIX/$RDF_GIST_ID/raw/$RDF_FILE
curl -sO https://$PREFIX/$SCHEMA_GIST_ID/raw/$SCHEMA_FILE

Once downloaded, you can then upload the schema and data with:

curl -s "alpha.$AZ_DNS_DOMAIN/mutate?commitNow=true" \
  --request POST \
  --header "Content-Type: application/rdf" \
  --data-binary @sw.rdf | jq

curl -s "alpha.$AZ_DNS_DOMAIN/alter" \
  --request POST \
  --data-binary @sw.schema | jq

Connect to Ratel UI

After a few moments, you can check the results at http://ratel.example.com (substituting your domain for example.com).

In the dialog for Dgraph Server Connection, configure the Dgraph Alpha URL, e.g. http://alpha.example.com (again substituting your domain for example.com).

Test Using the Ratel UI

In the Ratel UI, paste the following query and click run:

{
  me(func: allofterms(name, "Star Wars"),
    orderasc: release_date)
    @filter(ge(release_date, "1980")) {
    name
    release_date
    revenue
    running_time
    director {
      name
    }
    starring (orderasc: name) {
      name
    }
  }
}

You should see something like this:

Cleanup Dgraph resources

You can remove Dgraph resources, load balancer, and external disks with the following:

helmfile --file examples/dgraph/helmfile.yaml delete
kubectl delete pvc --namespace dgraph --selector release=demo

NOTE: If you delete the AKS cluster without going through this step, there will be disks left over that will incur costs.

Cleanup the Project

You can clean up resources that can incur costs with the following:

Remove External Disks

Before deleting the AKS cluster, make sure any disks that were used are removed; otherwise, these will be left behind and incur costs.

kubectl delete pvc --namespace dgraph --selector release=demo

NOTE: These resources cannot be deleted if they are in use. Make sure that the resources that use the PVC resources were deleted first, i.e. helm delete demo --namespace dgraph.

Remove the Azure Resources

This will remove the Azure resources:

az aks delete \
  --resource-group $AZ_RESOURCE_GROUP \
  --name $AZ_CLUSTER_NAME

az network dns zone delete \
  --resource-group $AZ_RESOURCE_GROUP \
  --name $AZ_DNS_DOMAIN

Resources

Blog Source Code

- Blog Source Code: https://github.com/darkn3rd/blog_tutorials/blob/master/kubernetes/aks/series_1_endpoint/part_2_ingress_nginx/

Conclusion

This article shows how to use an ingress controller (ingress-nginx) combined with external-dns for automatic record creation. It is a follow-up to the previous article, where I showed how to do the same thing with a service resource.

Some of the take-aways from this exercise include:

- An ingress requires only a single external load balancer, the one in front of the ingress controller, for all endpoints.

- A service of type LoadBalancer requires a load balancer per endpoint.

- The external load balancer is typically Layer 4 (TCP), whereas an ingress is Layer 7 (HTTP).

And some side notes along the way:

- helmfile can dynamically compose and deploy Helm charts and, with the raw Helm chart, Kubernetes manifests.

- How to use managed identities to allow pods running on AKS worker nodes (VMSS) to access Azure resources.

In the future, I will cover how to automate certificate creation with the cert-manager add-on, so that you can have encrypted traffic from the client to the ingress endpoint.